New technologies are helping us meet global energy demand

Don Stowers, Editor-OGFJ

As the world’s appetite for hydrocarbon-based fuels increases in the next few decades, technology may be our best hope for increasing supplies to keep the wheels of industry turning. Although petroleum production is forecast to increase in the next 10 to 15 years, it is unlikely to keep pace with demand unless technology comes to the rescue, as it always has in the past.

Technology has enabled the oil and gas industry to drill deeper, under higher pressures, and under extremely adverse conditions, all while increasing efficiency. High commodity prices have allowed producers to use new technologies to go back into old sites and get more out of them than was ever thought possible, and to exploit unconventional resources that were previously considered uneconomical to recover.

Innovations in seismic imagery are just one of the developments that have made all this possible. Seismic or electromagnetic cross-well surveys, 4D seismic techniques, and behind-casing logging are other methods used to recover previously bypassed oil.

I-field and e-field technologies, which rely on advances in electronics and information and communication technologies, may transform the industry over the next 20 years. In addition, the industry is currently exploring the potential of polymer and microbial enhanced oil recovery (MEOR) as techniques for improving recovery rates.

IEA Report

In a recent report, “Resources to Reserves,” the International Energy Agency in Paris discusses the challenges of providing the 1.5 trillion boe of oil and natural gas projected to be required worldwide over the next 25 years - an amount about equal to cumulative production over the past century.

Dismissing the notion that global oil production has peaked or is about to peak, IEA executive director Claude Mandil says that “technological progress has always been the key factor to prove the doomsayers wrong.” He believes technology will enable new resources to be developed cost-effectively, and it will accelerate the implementation of new projects.

“There is no shortage of oil and gas in the ground,” he says. “But quenching the world’s thirst for them will call for major investment in modern technologies.”

The world, according to the study, contains at least 20 trillion boe of oil and gas, about half of it conventional and 5 to 10 trillion boe now technically recoverable. The challenge is to make technically recoverable oil and gas economically recoverable as well.

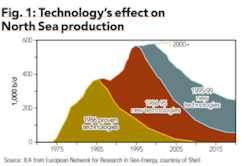

To highlight the contribution of technological advances, the study uses North Sea production gains as an example, showing the effect on output as new technologies and reservoir management practices were introduced at intervals during the past 20 years (Fig. 1).

Reservoir modeling and simulation

Modeling the earth’s subsurface and predicting reservoir flow are essential to contemporary oil and gas producers. The companies that do this best have a competitive advantage over those that don’t.

The sophistication of the modeling and analysis that can be performed is limited by the supporting hardware and software. Although significant progress has been made in memory capacity and processor speed in the last decade, the models geologists and reservoir engineers require continue to push the capabilities of available technology.

Recent advances in high-performance computing and new software have provided companies with several new options that will allow for more accurate subsurface models that can lead to better predictions of reservoir performance.

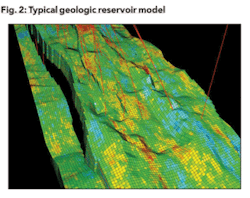

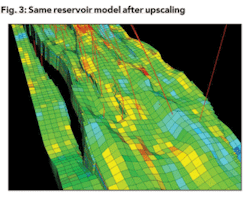

The reservoir model needed by the engineer is different from the one used by the geologist. Geologic models are built at a scale too fine for reservoir simulation, which has led to a practice called “upscaling,” by which the model is generally “coarsened” to reduce the overall number of cells that make up the model. This is based on the assumption that the smaller the model, the faster the reservoir simulator will be able to generate a 20- to 30-year prediction of well and field production.

A coarsened model can mean that reservoir simulation runs can be completed in a matter of hours, rather than days. Figures 2 and 3 show how a geologic reservoir model can be coarsened for engineering evaluation.
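As a rough illustration of the coarsening idea described above, the following minimal Python sketch averages non-overlapping blocks of a fine grid to produce a coarser one. It is not the method used by any particular vendor: the function name `upscale`, the 4 x 4 porosity values, and the choice of a simple arithmetic mean are all assumptions made for illustration. Commercial upscaling tools typically treat properties such as permeability with more elaborate averaging.

```python
def upscale(grid, factor):
    """Coarsen a 2-D grid by averaging non-overlapping factor x factor blocks.

    Hypothetical helper for illustration; a plain arithmetic mean is assumed.
    """
    rows, cols = len(grid), len(grid[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of factor")
    coarse = []
    for r in range(0, rows, factor):
        coarse_row = []
        for c in range(0, cols, factor):
            # Collect every fine cell that falls inside this coarse cell.
            block = [grid[r + i][c + j]
                     for i in range(factor)
                     for j in range(factor)]
            coarse_row.append(sum(block) / len(block))
        coarse.append(coarse_row)
    return coarse


# Made-up porosity values on a fine 4 x 4 "geologic" grid.
fine_porosity = [
    [0.10, 0.12, 0.20, 0.22],
    [0.14, 0.16, 0.24, 0.26],
    [0.30, 0.28, 0.08, 0.06],
    [0.26, 0.24, 0.04, 0.02],
]

coarse_porosity = upscale(fine_porosity, 2)
fine_cells = sum(len(row) for row in fine_porosity)
coarse_cells = sum(len(row) for row in coarse_porosity)
print(fine_cells, "cells coarsened to", coarse_cells)
```

Here each 2 x 2 block of fine cells becomes one coarse cell, so the simulator would work with a quarter as many cells. The trade-off is that contrasts within each block are smoothed away.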

Scot Evans of Landmark Graphics detailed how upscaling works and the value it creates for E&P companies in the May 17, 2004 issue of Oil & Gas Journal. He believes the new tools and methodologies he describes will lead to models that more accurately reflect the static and dynamic properties of a reservoir.

Improved visualization

Some remarkable advances in visualization technology have occurred recently in the oilpatch. Larger companies, including BP and Statoil, began experimenting with the creation of virtual reality centers in the mid-1990s. The intent was to bring together asset teams in one location to examine the same data in three dimensions and to reach consensus decisions.

These visualization centers featured giant curved screens driven by supercomputers that allowed scientists and engineers to gather in groups to view 3-D and related data. Unfortunately, only the largest companies - primarily the national oil companies and the super majors - were able to afford this expensive new technology.

Smaller companies found it too costly to build and equip these large centers and too difficult to bring all the right people together at one time. The solution was remote visualization, but bandwidth limitations often made this impractical.

Fortunately, the architecture of the latest computer processors has been redesigned so that users can run visualization software and e-mail on the same system. And the good news is that these solutions are not prohibitively expensive. Linux, for example, is an operating system capable of performing feats today that only high-end technology could manage several years ago.

Applications from companies like Schlumberger, Landmark Graphics, and Paradigm Geophysical can be supported with $10,000 workstations equipped with the right processors and operating systems rather than the $500,000 investment that was needed previously.

Nearly a decade has passed since the first visualization rooms were created, and the falling cost of technology has made them practical for a larger number of companies. These companies believe there is inherent value in bringing together geologists, geophysicists, and engineers under the same roof to share their thoughts and interpretations of data. However, many other companies say they are able to get the same results through remote visualization.

Techniques for mapping subsurface fractures

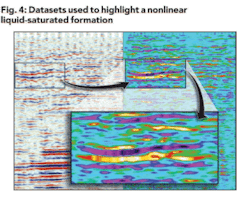

Writing in the Oct. 11, 2004 issue of Oil & Gas Journal, Tawassul Khan and Sofia McGuire of Nonlinear Seismic Imaging in Houston describe a new technique for mapping subsurface fractures using their elastic nonlinear response.

Khan and McGuire point out that the ability to delineate and accurately map the fractures in a formation is critical to field development strategies. Quite often, horizontal wells are used to improve production efficiency from fractured reservoirs. However, maps typically fail to show the orientation and location of the conductive faults, joints, and fractures, thereby creating a risk that water cut from a horizontal well may increase rather than decrease if the well is not correctly positioned.

Current seismic imaging technology is inadequate to reliably measure the location, orientation, spacing, and connectivity of the open fractures in reservoir formations. What they propose is a new method of detecting open natural fractures in the subsurface rocks, since fractures control the efficiency of producing hydrocarbons from more than half the reservoirs in the world.

The elastic properties of the rocks on either side of a fracture are very different from those of the fracture itself, so a seismic wave that travels through the fracture experiences the effects of that localized elastic nonlinear anomaly. The elastic nonlinearity of the fracture changes according to the orientation of the fracture and the angle at which the seismic wave travels through it.

WEA technology

Another new development in seismic processing technology is wavelet energy absorption, or WEA - the latest in an evolving series of seismic industry direct detection technologies. The WEA methodology is based on the principle that gas-charged reservoirs absorb more seismic energy than non-gas-charged reservoirs because seismic energy propagates less efficiently through gas-charged media.

A key characteristic of WEA technology is that it generates a volume-oriented result. WEA can be used on either 2-D or 3-D data, and no well log information is required to calibrate the WEA result. WEA technology is the latest method in the continuing evolution of direct detection of hydrocarbons from seismic data, and it seems to have broader applications in successfully detecting a greater range of hydrocarbon reservoirs than other methods.

Seismic weight drop

And, finally, Houston-based Apache Corp. has teamed up with Polaris Explorer Ltd. in Calgary to develop a ground-based seismic impact source vehicle that compares favorably with conventional dynamite acquisition methods.

Trials with dropping weights from helicopters in northern Canada have led to the creation of the Explorer 860 buggy, a ground-based vehicle that has been used repeatedly to acquire 3-D and 2-D seismic data since January of 2004.

The vehicle’s hydraulic system generates a thump by forcing a cast steel weight against a base plate on the ground. Multiple units can be used at the same time.

The resulting seismic data have a signal-to-noise ratio as good as or better than conventional dynamite source records in the same area, said Apache’s Mike Bahorich, a former president of the Society of Exploration Geophysicists.

The potential for cost saving appears to be as much as 40%, said Jim Ross, a geophysicist with Apache Canada. In addition, the environmental footprint is greatly lessened because dynamite is not used, shot holes need not be drilled, and fewer trees need to be cut down.

Conclusion

Progress in gathering, processing, and interpreting seismic data has been remarkable in recent years, as has been the industry’s ability to improve reservoir management techniques. Powered by the large research and development budgets of the majors, companies have pioneered common depth point imaging, 3-D and multicomponent data acquisition, geophysical workstations, and room-sized interpretation centers where geologists, geophysicists, and engineers can immerse themselves in data.

Recent progress in depth imaging, pre-stack interpretation, and 3-D visualization will certainly continue with more sophisticated algorithms and more powerful computers.