Live earth models digitally exchange data in reservoir simulators

Menhal A. Al-Ismael

Ali A. Al-Turki

Abdulaziz M. Al-Darrab

Saudi Aramco

Dhahran

Reservoir simulation is a cornerstone of field development planning. It uses mathematical, physics-based formulations to describe fluid flow behavior in reservoirs, tracking pressure and fluid saturation changes over time to better assess development plans and recovery. Simulation models draw on a variety of data, such as seismic, well logs, fluid characteristics, and petrophysical properties.

Historical field and well performance data are crucial inputs for maintaining and history matching numerical reservoir simulation models. A well-executed, history-matched simulation model can assess development scenarios, estimate recovery, and support business plans for sound operational and strategic decisions. Improving the predictability of simulation models requires investing more time and effort in gathering and quality checking field data, as well as in model calibration and history matching. The model is maintained by updating historical data and events, such as new rates (allocations), pressure data from existing wells, and recently drilled and completed wells.

Including newly acquired data may require re-calibrating or re-history matching the model, especially if a substantial amount of historical data has accumulated or major activities or events have taken place in the field.

Large amounts of well data from onshore and offshore fields are available from different data sources and are captured in numerical reservoir simulation models in several ways. Many data types describe different well configurations and operational constraints, which are key to building and maintaining simulation models. Handling these volumes involves data gathering, quality checking, and formatting for different simulation processes. The challenge of integrating this data grows with the complexity of well designs and configurations and with differing field operational constraints, ranging from conventional completion practices to advanced and smart completion technology.

The quality of historical well data is another challenge and a major factor in model uncertainty. Quality varies by data type, field, measurement frequency, and workover activity. Each data type is collected and measured differently, and manual procedures in the process can introduce human error or bias.

Engineering, scientific measures

Previous work has addressed wells and reservoir simulation data.1-4 A numerical reservoir simulation model combines several different objects. Rock properties, fluid quantities, well specifications, and so on are obtained in differing formats with varying ranges of uncertainty, which makes it difficult to manually build new simulation models or maintain existing ones. For this reason, workflows have been established to automate data acquisition and the building and updating of simulation models.

This article focuses on historical well information, a critical part of simulation modeling. Wells in simulation models represent fluid production and injection, which drive fluid flow within the model, so well data validation and quality control (QC) are of significant importance.

Well data types

Reservoir simulators require historical production and injection rates, along with historical well and field performance, to match the numerically calculated reservoir energy. The simulator also requires perforations and open-hole sections to be discretized at the model's resolution. Well events such as plugs, squeezes, and reperforations are also required to maintain an accurate history. These data describe the interaction between wells and the reservoir and define the entry sections through which fluids move into or out of the reservoir. The data are usually stored in relational databases referenced to a continuous space, i.e., XYZ universal transverse Mercator (UTM) coordinates, where X, Y, and Z define the three axes of a 3D space.

Perforations, open-hole sections, plugs, and squeezes are stored in data repositories with standard reference depths. Because numerical reservoir simulation discretizes the reservoir to solve the fluid flow equations, well-related data must be preprocessed to translate measured depth and true vertical depth into discrete locations in the IJK mesh format, with I, J, and K defining the three axes of the discretized 3D grid.
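As a rough illustration of this translation, the Python sketch below interpolates a position along a deviation survey at a given measured depth and then maps that XYZ point to 1-based IJK indices. It assumes a regular Cartesian grid and linear interpolation between survey stations; production grids are often corner-point geometries and trajectories are usually computed with the minimum-curvature method, so the function names (`md_to_xyz`, `xyz_to_ijk`) and grid parameters are hypothetical.

```python
import math
from bisect import bisect_right

# Hypothetical survey station: (measured depth, x, y, z), with z as true vertical depth (positive down).
Station = tuple[float, float, float, float]


def md_to_xyz(survey: list[Station], md: float) -> tuple[float, float, float]:
    """Linearly interpolate the well position at measured depth `md`.

    Assumes stations sorted by strictly increasing MD. Linear interpolation is a
    simplification of the minimum-curvature method normally used for trajectories.
    """
    mds = [s[0] for s in survey]
    if not mds[0] <= md <= mds[-1]:
        raise ValueError(f"MD {md} is outside the surveyed range")
    i = min(bisect_right(mds, md), len(mds) - 1)  # first station deeper than md (clamped at the end)
    (md0, x0, y0, z0), (md1, x1, y1, z1) = survey[i - 1], survey[i]
    t = (md - md0) / (md1 - md0)
    return (x0 + t * (x1 - x0), y0 + t * (y1 - y0), z0 + t * (z1 - z0))


def xyz_to_ijk(point, origin, cell_size, dims):
    """Map an XYZ point to 1-based (I, J, K) indices on a hypothetical regular Cartesian grid.

    Returns None when the point falls outside the grid.
    """
    ijk = []
    for coord, start, size, n in zip(point, origin, cell_size, dims):
        index = math.floor((coord - start) / size)
        if not 0 <= index < n:
            return None
        ijk.append(index + 1)
    return tuple(ijk)


# Usage: a well drilled vertically to 1,000 depth units, then horizontally for 1,000 units.
survey = [(0.0, 0.0, 0.0, 0.0), (1000.0, 0.0, 0.0, 1000.0), (2000.0, 1000.0, 0.0, 1000.0)]
point = md_to_xyz(survey, 1500.0)
print(point, xyz_to_ijk(point, origin=(0.0, 0.0, 900.0), cell_size=(100.0, 100.0, 50.0), dims=(20, 20, 10)))
```

Applying the same mapping at closely spaced measured depths along a perforated interval yields the list of grid cells that the interval touches.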

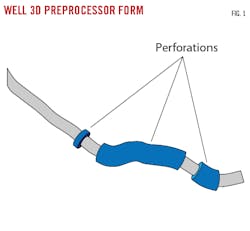

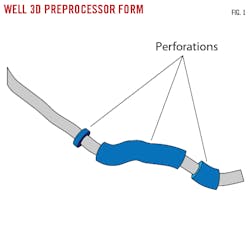

Fig. 1 depicts an example of a well trajectory and perforations represented in continuous 3D XYZ space. Fig. 2 depicts the same well represented in discrete 3D IJK space. Comparing the two shows how well events are transformed from one space (domain) to another, i.e., from data repositories through pre-simulation processing to the reservoir simulation model.

Although the simulator mainly requires historical well information such as perforated sections and production and injection rates, other well data types are also needed to continually update and validate the input data of evergreen simulation models. These include deviation surveys, which determine where wells intersect grid cells in the simulation model. The preprocessor identifies which intersected grid cells are perforated and calculates a ratio factor for each perforated cell indicating how much of that cell is actually perforated. The simulator uses this ratio factor to minimize the loss of detail caused by discretization.
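The article does not state how the ratio factor is computed. A minimal sketch, assuming it is the fraction of the wellbore length inside a cell that falls within perforated intervals, is shown below. The entry and exit measured depths for each cell would come from the deviation-survey intersection step; `ratio_factors` and its input layout are hypothetical.

```python
def overlap(a_top, a_base, b_top, b_base):
    """Length of the overlap between two depth intervals (0 if they are disjoint)."""
    return max(0.0, min(a_base, b_base) - max(a_top, b_top))


def merge_intervals(intervals):
    """Merge overlapping perforation intervals so shared footage is not counted twice."""
    merged = []
    for top, base in sorted(intervals):
        if merged and top <= merged[-1][1]:
            merged[-1][1] = max(merged[-1][1], base)
        else:
            merged.append([top, base])
    return merged


def ratio_factors(cell_segments, perforations):
    """Assumed ratio factor: perforated wellbore length in a cell / wellbore length in that cell.

    cell_segments: {(i, j, k): (md_in, md_out)}, where the trajectory enters and leaves each cell
    perforations:  [(top_md, base_md), ...]
    Only cells with a non-zero perforated length are returned.
    """
    perfs = merge_intervals(perforations)
    factors = {}
    for ijk, (md_in, md_out) in cell_segments.items():
        perforated = sum(overlap(md_in, md_out, top, base) for top, base in perfs)
        if perforated > 0:
            factors[ijk] = perforated / (md_out - md_in)
    return factors


cells = {(6, 1, 3): (1400.0, 1500.0), (7, 1, 3): (1500.0, 1600.0), (8, 1, 3): (1600.0, 1700.0)}
factors = ratio_factors(cells, [(1450.0, 1550.0), (1520.0, 1560.0)])
print(factors)
```

Merging the intervals first matters when repositories hold overlapping or re-perforated intervals; without it the perforated length, and therefore the factor, could exceed the cell length.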

Historical casing and tubing data are also required when modeling wells completed with advanced techniques, including smart control valves and equalizers. Such wells are referred to as complex wells because of their advanced downhole equipment, complex completions, and segmented modeling.5

Complex wells are defined differently in reservoir simulation models: each well is divided into multiple segments from the wellbore all the way to the surface. This segmentation enables more accurate pressure drop calculations that account for frictional losses, gravity, smart completion nozzle restrictions, and other factors. Complex-well historical data must be accurately incorporated into the preprocessor, including casing, liners, tubing, packers, inflow control valves (ICVs), and inflow control devices (ICDs). Each data type has parameters the simulator requires, such as depths, diameters, and nozzle sizes.
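To illustrate the kind of record-level check this implies, the sketch below assumes a hypothetical table of required parameters per component type (depths, diameters, nozzle size) and reports components the preprocessor could not model. The component types, field names, and requirements are illustrative assumptions, not a simulator specification.

```python
from dataclasses import dataclass, field

# Hypothetical parameter requirements per component type; the real set depends on the simulator.
REQUIRED_PARAMETERS = {
    "casing": {"top_md", "base_md", "od", "id"},
    "liner": {"top_md", "base_md", "od", "id"},
    "tubing": {"top_md", "base_md", "od", "id"},
    "packer": {"top_md", "base_md"},
    "icv": {"top_md", "base_md", "id"},
    "icd": {"top_md", "base_md", "nozzle_size"},
}


@dataclass
class Component:
    well: str
    kind: str
    params: dict[str, float] = field(default_factory=dict)


def missing_parameters(components):
    """Return (well, kind, message) for each component that lacks required parameters."""
    issues = []
    for comp in components:
        required = REQUIRED_PARAMETERS.get(comp.kind)
        if required is None:
            issues.append((comp.well, comp.kind, "unknown component type"))
            continue
        missing = sorted(required - set(comp.params))
        if missing:
            issues.append((comp.well, comp.kind, "missing " + ", ".join(missing)))
    return issues


completion = [
    Component("W-1", "tubing", {"top_md": 0.0, "base_md": 1400.0, "od": 4.5, "id": 3.958}),
    Component("W-1", "icd", {"top_md": 1500.0, "base_md": 1510.0}),
    Component("W-1", "screen"),
]
print(missing_parameters(completion))
```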

Formation tops are another important piece of well information for reservoir simulation; they are used to validate subsurface formations and perform stratigraphic investigations. Interpreted from well logs, formation tops are also used to quality check seismic surfaces and geology. History matching involves analyzing additional historical well information such as repeat formation tests, production logs, core data, and productivity and injectivity index data. These data are crucial for improving history-match quality and understanding flow behavior in the wellbore.

Data QC workflow

Each of the data types described above carries some level of uncertainty. Various tools collect field data, after which human intervention follows, with some data entered manually before being transferred from the field to a central repository. Some data require interpretation before transfer. Data are then retrieved from the repository and loaded into preprocessing tools for analysis and preparation for numerical reservoir simulation models. This cross-domain journey involves multiple layers of processing, any of which can alter or lose data through human error or incompatible technologies. Data must therefore be validated through a set of QC procedures.

Working on giant reservoirs with thousands of wells and multiple data repositories makes it impossible to check each well's data manually. The workflow discussed in this article includes a QC module that automatically validates different well data types before they are retrieved from the data repository.

The QC module methodology has two main layers. The first compares data across data sources: if a data type has information in multiple sources, the workflow ensures consistency by comparing their specifications. The second validates the data against a set of rules, expected data ranges, and thresholds to ensure that values are reasonable and to exclude outliers.
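The two layers can be sketched as follows, assuming each repository can be read into a dictionary keyed by record (here, well and month) with numeric fields. `compare_sources` stands in for the cross-source consistency layer with a tolerance, and `range_check` for the rule layer with expected ranges; the field names, tolerance, limits, and values are hypothetical.

```python
def compare_sources(source_a, source_b, tol=0.0):
    """Layer 1 (sketch): cross-check records held in two repositories.

    Each source maps a record key, e.g. (well, month), to {field: numeric value}.
    """
    issues = []
    for key in sorted(source_a.keys() | source_b.keys()):
        if key not in source_a or key not in source_b:
            issues.append((key, "present in only one source"))
            continue
        rec_a, rec_b = source_a[key], source_b[key]
        for name in sorted(rec_a.keys() & rec_b.keys()):
            if abs(rec_a[name] - rec_b[name]) > tol:
                issues.append((key, f"{name}: {rec_a[name]} vs {rec_b[name]}"))
    return issues


def range_check(records, limits):
    """Layer 2 (sketch): flag values outside expected ranges, given as {field: (low, high)}."""
    issues = []
    for key, rec in sorted(records.items()):
        for name, (low, high) in limits.items():
            value = rec.get(name)
            if value is not None and not low <= value <= high:
                issues.append((key, f"{name}={value} outside [{low}, {high}]"))
    return issues


# Hypothetical monthly rate records from two repositories.
source_a = {("W-1", "2020-01"): {"oil_rate": 1200.0, "water_rate": 50.0},
            ("W-2", "2020-01"): {"oil_rate": 800.0, "water_rate": -10.0}}
source_b = {("W-1", "2020-01"): {"oil_rate": 1200.0, "water_rate": 65.0},
            ("W-3", "2020-01"): {"oil_rate": 400.0, "water_rate": 5.0}}
limits = {"oil_rate": (0.0, 20000.0), "water_rate": (0.0, 20000.0)}
print(compare_sources(source_a, source_b, tol=1.0))
print(range_check(source_a, limits))
```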

Each data type has its own QC rules and acceptance criteria. Some data, such as deviation surveys, are very dense in the data repository, with thousands of records for a single well. The module efficiently cross-checks this large volume of data and detects possible issues before the data are used in the simulation model.

For example, one validation check compares the start depth in the deviation survey table with the wellhead point in the wellhead table. The workflow also detects repeated trajectory points and unexpected gaps between consecutive trajectory points. Sudden changes in drift angle or dogleg severity are also checked and flagged.
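A sketch of these deviation-survey checks follows. It computes dogleg severity from the standard minimum-curvature dogleg angle, which also reflects sudden changes in drift (inclination) angle, and flags repeated stations, large gaps, and a survey start that does not match the wellhead. The thresholds (`max_gap`, `max_dls`), the 100-unit course length, and comparing the first station's MD with a single wellhead depth value are assumptions for illustration.

```python
import math


def dogleg_severity(md1, inc1, azi1, md2, inc2, azi2, course_length=100.0):
    """Dogleg severity (degrees per course_length) between two survey stations.

    Uses the minimum-curvature dogleg angle:
    cos(beta) = cos(I2 - I1) - sin(I1) * sin(I2) * (1 - cos(A2 - A1))
    """
    i1, i2 = math.radians(inc1), math.radians(inc2)
    d_azi = math.radians(azi2 - azi1)
    cos_beta = math.cos(i2 - i1) - math.sin(i1) * math.sin(i2) * (1 - math.cos(d_azi))
    beta = math.degrees(math.acos(max(-1.0, min(1.0, cos_beta))))  # clamp floating-point error
    return beta * course_length / (md2 - md1)


def check_survey(stations, wellhead_depth, depth_tol=1.0, max_gap=500.0, max_dls=10.0):
    """stations: [(md, inclination_deg, azimuth_deg), ...] in repository order (hypothetical schema)."""
    issues = []
    if abs(stations[0][0] - wellhead_depth) > depth_tol:
        issues.append(f"survey starts at MD {stations[0][0]}, wellhead at {wellhead_depth}")
    for (md1, i1, a1), (md2, i2, a2) in zip(stations, stations[1:]):
        step = md2 - md1
        if step <= 0:
            issues.append(f"repeated or out-of-order station at MD {md2}")
            continue
        if step > max_gap:
            issues.append(f"gap of {step} between MD {md1} and {md2}")
        dls = dogleg_severity(md1, i1, a1, md2, i2, a2)
        if dls > max_dls:
            issues.append(f"dogleg severity {dls:.1f} at MD {md2}")
    return issues


stations = [(0, 0, 0), (500, 0, 0), (500, 0, 0), (1000, 2, 45), (1100, 20, 45), (2000, 21, 45)]
survey_issues = check_survey(stations, wellhead_depth=0)
print(survey_issues)
```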

Another check validates casing and tubing data. The length of each casing or tubing string is validated against the sum of its accessories, i.e., the pipe and completion sections that make up that string.
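A minimal sketch of the length check, assuming accessory records carry the ID of their parent string and a length, and using a hypothetical tolerance of 0.5 length units. Accessories whose parent string is absent are reported as well, since they would otherwise be silently dropped.

```python
from collections import defaultdict


def check_string_lengths(strings, accessories, tol=0.5):
    """Compare each string's reported length with the sum of its accessory lengths.

    strings:     {string_id: reported_length}
    accessories: [(string_id, length), ...] pipe joints and completion sections
    """
    totals = defaultdict(float)
    for string_id, length in accessories:
        totals[string_id] += length
    issues = []
    for string_id, reported in sorted(strings.items()):
        total = totals.get(string_id, 0.0)
        if abs(total - reported) > tol:
            issues.append(f"{string_id}: reported {reported}, accessories sum to {total}")
    for string_id in sorted(set(totals) - set(strings)):
        issues.append(f"{string_id}: accessories without a parent string")
    return issues


# Hypothetical string IDs and lengths.
reported_strings = {"W-1/TBG-1": 1510.0, "W-1/CSG-1": 1800.0}
accessory_rows = [("W-1/TBG-1", 1480.0), ("W-1/TBG-1", 20.0), ("W-1/TBG-1", 10.0),
                  ("W-1/CSG-1", 1750.0), ("W-1/CSG-1", 40.0), ("W-1/LNR-9", 300.0)]
print(check_string_lengths(reported_strings, accessory_rows))
```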

Accessories are also validated by checking their depths to ensure they do not overlap and by checking that each accessory's OD is greater than its ID. Other QC checks include verifying that completions are assigned to the correct lateral, checking the lengths of completion accessories, and ensuring that a tubing's removal date is not later than the landing date of the next tubing.
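The accessory and tubing-date rules can be sketched as below. The dictionary schema, string IDs, and dates are hypothetical; the overlap test relies on sorting by top depth, after which any overlap appears between at least one adjacent pair.

```python
from datetime import date


def check_accessories(accessories):
    """Depth and dimension checks for the accessories of one casing or tubing string.

    accessories: list of dicts with 'name', 'top', 'base', 'od', 'id' (hypothetical schema).
    """
    issues = []
    ordered = sorted(accessories, key=lambda a: a["top"])
    for acc in ordered:
        if acc["base"] <= acc["top"]:
            issues.append(f"{acc['name']}: non-positive length")
        if acc["od"] <= acc["id"]:
            issues.append(f"{acc['name']}: OD {acc['od']} not greater than ID {acc['id']}")
    # Reports each accessory that overlaps the next one down.
    for upper, lower in zip(ordered, ordered[1:]):
        if lower["top"] < upper["base"]:
            issues.append(f"{upper['name']} overlaps {lower['name']}")
    return issues


def check_tubing_dates(tubings):
    """Each tubing must be removed no later than the next tubing is landed.

    tubings: list of (string_id, landed_date, removed_date or None) for one well.
    """
    issues = []
    ordered = sorted(tubings, key=lambda t: t[1])
    for (sid, _, removed), (next_sid, next_landed, _) in zip(ordered, ordered[1:]):
        if removed is None:
            issues.append(f"{sid} has no removal date but {next_sid} was landed {next_landed}")
        elif removed > next_landed:
            issues.append(f"{sid} removed {removed}, after {next_sid} landed {next_landed}")
    return issues


tubing_accessories = [
    {"name": "tubing joint", "top": 0.0, "base": 1480.0, "od": 4.5, "id": 3.958},
    {"name": "packer", "top": 1478.0, "base": 1482.0, "od": 8.2, "id": 3.875},
    {"name": "nipple", "top": 1482.0, "base": 1484.0, "od": 3.5, "id": 3.8},
]
tubing_history = [("TBG-1", date(2010, 3, 1), date(2015, 6, 1)), ("TBG-2", date(2015, 5, 20), None)]
print(check_accessories(tubing_accessories))
print(check_tubing_dates(tubing_history))
```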

Perforation data are also validated. For example, one check compares different repositories to ensure that all perforations are included and assigned to the correct lateral. Similar QC checks and rules are applied to other types of well data, including production and injection rates, real-time data, flow meters, productivity and injectivity, cores, and formation tops.
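One way to sketch the cross-repository perforation check, assuming perforations are rows of (well, lateral, top MD, base MD) and that two rows describe the same perforation when both depths agree within a hypothetical tolerance:

```python
def _match(perf, candidates, tol):
    """Return the first candidate on the same well whose top and base agree within tol."""
    for cand in candidates:
        if cand[0] == perf[0] and abs(cand[2] - perf[2]) <= tol and abs(cand[3] - perf[3]) <= tol:
            return cand
    return None


def compare_perforations(repo_a, repo_b, tol=0.5):
    """Flag perforations missing from either repository or assigned to different laterals.

    Each repository is a list of (well, lateral, top_md, base_md) rows (hypothetical schema).
    """
    issues = []
    for perf in repo_a:
        match = _match(perf, repo_b, tol)
        if match is None:
            issues.append(f"{perf[0]} {perf[2]}-{perf[3]}: missing from repository B")
        elif match[1] != perf[1]:
            issues.append(f"{perf[0]} {perf[2]}-{perf[3]}: lateral {perf[1]} in A, {match[1]} in B")
    for perf in repo_b:
        if _match(perf, repo_a, tol) is None:
            issues.append(f"{perf[0]} {perf[2]}-{perf[3]}: missing from repository A")
    return issues


repo_a = [("W-1", "L0", 1500.0, 1520.0), ("W-1", "L1", 2100.0, 2150.0)]
repo_b = [("W-1", "L0", 1500.2, 1520.0), ("W-1", "L0", 2100.0, 2150.0), ("W-1", "L1", 2400.0, 2420.0)]
print(compare_perforations(repo_a, repo_b))
```

The pairwise search is quadratic in the number of rows; grouping rows by well before matching would scale better for fields with thousands of wells.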

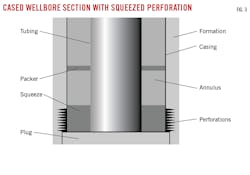

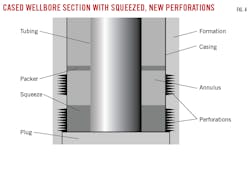

Figs. 3-4 show an example of a well with missing perforations. Fig. 3 illustrates a squeezed perforation isolating the wellbore from the formation. Fig. 4 shows the same well with an additional perforation added after the squeeze job. If this perforation is missed, the well is misrepresented and the simulation model receives inaccurate data. QC detects such missing data simply by checking production and injection rates: a fully plugged well should have no production, so a production profile showing otherwise indicates missing perforations. QC then prepares a report with detailed analysis and submits it to data management for further review and appropriate action.
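The rate-based check can be sketched by replaying perforation and squeeze events up to each production date and flagging dates that show production but no open interval. The event schema and the rule that a squeeze closes every open interval it overlaps are simplifying assumptions; plugs and other events would need similar handling.

```python
from datetime import date


def open_intervals(events, as_of):
    """Replay events up to `as_of` and return the perforated intervals still open.

    events: [(date, kind, top_md, base_md)], kind in {"perforate", "squeeze"} (hypothetical schema).
    """
    open_now = []
    for when, kind, top, base in sorted(events):
        if when > as_of:
            break
        if kind == "perforate":
            open_now.append((top, base))
        elif kind == "squeeze":
            # Assumed rule: a squeeze closes every open interval it overlaps.
            open_now = [(t, b) for t, b in open_now if b <= top or t >= base]
    return open_now


def flag_missing_perforations(events, production, min_rate=0.0):
    """Return production dates with rate above min_rate but no open interval on record."""
    return [when for when, rate in production
            if rate > min_rate and not open_intervals(events, when)]


events = [(date(2012, 1, 10), "perforate", 1500.0, 1520.0),
          (date(2016, 4, 1), "squeeze", 1490.0, 1530.0)]
production = [(date(2015, 12, 1), 900.0), (date(2016, 6, 1), 0.0), (date(2017, 1, 1), 650.0)]
print(flag_missing_perforations(events, production))
```

Here the well produces in 2017 although its only recorded perforation was squeezed in 2016, which is the pattern in Figs. 3-4 that points to a perforation missing from the repository.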

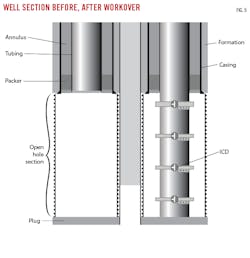

Fig. 5 shows a schematic of another potential data inaccuracy and the implications of inaccurate well input data in the simulation model. The horizontal well in this example produced as an open hole until the water cut reached its operational limit. A workover was then performed to control water by installing ICDs to equalize production and minimize water cut. Updating the simulation model with this workover event represents the well accurately, with ICDs at the correct locations (XYZ UTM coordinates) and with the correct specifications.

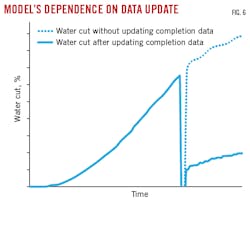

Simulation results in Fig. 6 show the impact on water cut of accurately updating the well in the simulation model. If the model is not updated properly, the simulated well behavior will be misleading and can lead to unwanted consequences.

Integrated framework

Simulation well information is retrieved from the data repository using the workflow described in this article, either through conventional data export and import or through automated data transmission.

The simulation workflow gives the engineer an interface to data repositories for accessing, retrieving, formatting, and checking data in formats that all pre-processing, post-processing, and simulation applications can read. The process starts with accessing the application via credentials and then proceeds through several steps to extract the required data in a specified format. Fig. 7 is a diagram of the conventional workflow for transferring simulation well data.

Data-transfer automation

Developing backend services that expose the workflow's functionality to other applications through seamless communication allowed the engine to be used efficiently. This transfer workflow provides new, automated ways of accessing simulation well information.

The new framework is an integration solution that automates well data acquisition and the export and import processes. It is also meant to provide a data exchange solution that meets the specialized needs of exploration and production professionals, offering a more effective and efficient way to connect diverse applications and data repositories.

The backend services allow pre-processing applications to communicate with different data sources to load new information or update existing historical wells. The framework thus automates updating simulation models with minimal human intervention.

Fig. 8 shows the interaction between the backend services, data repositories, and different applications. The framework substantially reduces the time required to update well information in simulation models. It eliminates the need to access multiple applications and perform multiple export and import operations, and engineers no longer need to deal with the different file formats used by different applications.

The framework avoids manual editing and handling of huge files, preventing human errors and providing accurate well data updates, which helps improve the overall accuracy of, and confidence in, simulation results. Furthermore, the communication layer uses advanced data security and management to prevent exposure of well information.

References

- Shideed, M.A., “Creative Utilization for Oracle Database to Meet Petroleum Engineering’s Needs,” SPE 93279, SPE Middle East Oil and Gas Show and Conference, Manama, Bahrain, Mar. 12-15, 2005.

- Al-Ismael, M.A., Al-Khawaja, H.A., Al-Quhaidan, Y.A., Nooruddin, H.A., and Shedid, M., “Automation of Well Modeling and Data Validation for Reservoir Simulation,” IPTC 17541, International Petroleum Technology Conference, Doha, Qatar, Jan. 19-22, 2014.

- Al-Ismael, M., Al-Khawaja, H., Al-Nahdi, U., and Akhtar, M.N., “Well Completion Data Adjustment Workflow for Reservoir Simulation,” IPTC 17734, International Petroleum Technology Conference, Kuala Lumpur, Malaysia, Dec. 10-12, 2014.

- Al-Zahrani, T., Al-Mulla, M., and Al-Nuaim, M., “Automatic Well Completions and Reservoir Grid Data Quality Assurance for Reservoir Simulation Models,” SPE 175623, SPE Reservoir Characterization and Simulation Conference and Exhibition, Abu Dhabi, Sept. 14-16, 2015.

The authors

Menhal A. Al-Ismael is a petroleum engineering systems analyst working in the simulation systems division of Saudi Aramco’s petroleum engineering applications services department. He holds a BS in information and computer science and an MS in petroleum engineering, both from King Fahd University of Petroleum and Minerals (KFUPM), Dhahran, Saudi Arabia. He is a Society of Petroleum Engineers (SPE) certified petroleum engineer.

Ali A. Al-Turki is a petroleum engineering systems specialist working in the simulation systems division of Saudi Aramco’s petroleum engineering applications services department. He holds a BS in computer science from KFUPM and received both MS and PhD degrees in petroleum engineering from the University of Calgary. He is an SPE certified petroleum engineer.

Abdulaziz M. Al-Darrab is a petroleum engineering systems analyst working in the simulation systems division of Saudi Aramco’s petroleum engineering applications services department. Abdulaziz holds a BS in software engineering from KFUPM and an MS in petroleum engineering from the University of Southern California. He is an SPE certified petroleum engineer.