LEAN ENERGY MANAGEMENT-5: Enterprise-wide systems integration needed in ultradeepwater operations

In the first four parts of this lean energy management (LEM) series, we laid out the logic for adopting lean management techniques, common in other manufacturing industries such as automotive and aerospace, to cut cost and cycle time in the ultradeepwater.

Project life-cycle processes, systems engineering analyses, real options, feature-based design, virtual manufacturing, and supportability are all required for LEM to succeed.

We have also shown that the deepwater industry in particular must abandon the net present value (NPV) economic model in favor of a more sophisticated real options methodology, one that adapts better to uncertainty in cost, commodity price, and reservoir performance over time.
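To see why a static NPV can understate a flexible project, consider a deliberately simplified two-scenario sketch. It is our illustration, not a calculation from the earlier parts of this series: all numbers are hypothetical, discounting is ignored, and the "flexible" case assumes the development decision can be deferred until the price scenario is known.

```python
# Hypothetical two-scenario comparison of static NPV vs. a deferral option.
# Each scenario is (probability, present value of revenue if developed).
# Discounting and the cost of waiting are ignored for simplicity.

def static_npv(scenarios, cost):
    """Commit now: expected revenue minus development cost."""
    return sum(p * pv for p, pv in scenarios) - cost


def flexible_value(scenarios, cost):
    """Wait until the scenario is known, then develop only if it pays."""
    return sum(p * max(pv - cost, 0.0) for p, pv in scenarios)


scenarios = [(0.5, 180.0), (0.5, 60.0)]  # high-price and low-price cases
cost = 100.0
print(static_npv(scenarios, cost))      # 20.0
print(flexible_value(scenarios, cost))  # 40.0
```

Under these assumptions the ability to wait doubles the project's value; the difference (20 in these units) is flexibility to which a static NPV assigns no value.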

Now we turn to the most important component of LEM—all these techniques must be integrated into one enterprise-wide system (Fig. 1). This article discusses the additional technologies and processes that such widespread integration requires.

Capex, opex effects

LEM affects not only the capex involved in lean design and construction of production facilities, but also the opex required for lean operation of oil and gas fields over the life of each asset.

These must be combined with lean management of the portfolio of properties, extending to the entire holdings of the corporation. Consequently, adoption of lean management processes and tools must be driven from the very top of every "owner" organization down to all stakeholder companies. This has proved the most difficult obstacle to successful adoption of LEM in the upstream.

Successful lean management systems impose a software rigor that forces transparency in all design, construction, and operational activities of the owners, operators, and subcontractors. Many avenues for corruption are eliminated automatically as a result. Communication is maintained by sharing 3D solid models of all phases of each project with all stakeholders.

Of these models, the subsurface reservoir simulations are the hardest for oil companies to share with their subcontractors and partners, but lean experiences in other industries have dramatically reinforced the requirement that all information related to uncertainty in performance must be continuously shared if maximum efficiency is to be attained.

As another example of the need for more openness and transparency, consider modular construction in the design and build stages of major facilities. Modularity gives the real options economic model choices during the operating stage, so that operators can respond to changing performance metrics and maximize profitability in the face of uncertainty.

Because price and reservoir performance have both proved impossible to predict, requirements must be reevaluated continuously throughout the life of each project, not only for design and build but also for operations. The results are decisions that are both better and faster, along with less waste from poor communication among team participants.

Experience in a wide variety of manufacturing industries has shown that when such lean methodologies are adopted throughout the organization, breakthrough savings result (cf. http://lean.mit.edu). Not only is cycle time reduced, but material and labor costs also fall, often by half, and time to first oil is shortened dramatically as well (Reference 1; Fig. 2).

Enterprise-wide systems integration

The resulting early delivery of first oil and gas to market (not to be confused with pre-drilling production), together with dramatic cost and cycle-time savings, makes the case for using LEM in design and build activities obvious.

We have found, however, that upstream operators find it harder to accept that lean management of production operations also increases profitability. In fact, it is the connectivity among the design, build, and operate "silos" that provides the greatest economic benefits from LEM. This linkage requires the upstream industry to adapt enterprise-wide systems integration techniques from other industries and, interestingly, from its own downstream operations.

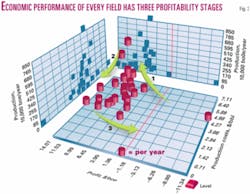

All oil and gas fields pass through three phases of profitability during their life cycles (Fig. 3). Each begins with "sunk costs" because cash must be invested up front to create the infrastructure needed for production in the first place (Phase 1).

As the years go by, properties enter a strong profitability stage (Phase 2). This stage of most fields lasts long after peak production is realized because production and reservoir engineers are given strong incentives to maximize short-term cash flow. Then, the inevitable decline in production overwhelms even the best of fields, and profitability is lost even when costs are controlled (Phase 3).
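The three phases can be reproduced with a toy cash-flow model. The sketch below is a hypothetical illustration, not data from Fig. 3: it assumes a single up-front capex, exponential production decline, a flat price, and a fixed annual operating cost, and it labels a year Phase 2 when its annual cash flow is positive and Phase 3 when annual cash flow turns negative after the field has been profitable.

```python
# Toy field life cycle: hypothetical numbers, not data from Fig. 3.

def annual_cash_flows(capex, years, peak_rate, decline, price, opex):
    """Yearly net cash flow: capex in year 0, then exponentially declining output.

    peak_rate is production in year 1; decline is the fractional drop per year;
    opex is a fixed operating cost charged in every producing year.
    """
    flows = [-capex]                      # year 0: the up-front (sunk) investment
    rate = peak_rate
    for _ in range(years):
        flows.append(rate * price - opex)
        rate *= 1.0 - decline
    return flows


def classify_phases(flows):
    """Label each year 1 (sunk cost), 2 (profitable), or 3 (loss after profit)."""
    phases, seen_profit = [], False
    for flow in flows:
        if flow > 0:
            seen_profit = True
            phases.append(2)
        else:
            phases.append(3 if seen_profit else 1)
    return phases


flows = annual_cash_flows(capex=500, years=10, peak_rate=20, decline=0.25,
                          price=25, opex=150)
print(classify_phases(flows))  # [1, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3]
```

With fixed opex and a declining rate, the loss phase arrives as soon as revenue falls below operating cost, even though every cost in the model is "controlled."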

If the same LEM methodologies that were used in the design and build phases were to be employed in the operational phases of all fields, this profit-to-loss cycle could be broken. In fact, lessons that petroleum companies learned in the downstream point to just such successful ways out of this vicious cycle.

However, because they come from the lowest-margin parts of the business, these lean lessons have been slow to migrate to the highest-margin operations in the upstream. In the refining and petrochemicals businesses, margins are so slim that process engineering has been carried to a high art. Systems are integrated, plants are managed with state-of-the-art software systems, and personnel are always aware of the profitability of their decisions. "Total plant" integration of operations is preached throughout the "control loop," a necessity when margins hover below 5% return on capital employed (ROCE).

These lessons have not been transferred to the more lucrative upstream, particularly in the deepwater, where margins are often 30% or better. Consider a major software innovation that the downstream has adopted but the upstream does not yet use: "reinforcement learning," a major component of the LEM economic model (Fig. 4).

Simulations of the "total plant" are used to maximize profitability, and they are rerun all day, every day, because inputs to the control center change constantly. The controller's actions define the chemical processes executed, just as in upstream production, but in the downstream the outputs from sensors monitoring the process are constantly fed to a "critic," which compares reality with performance metrics previously agreed to by all stakeholders.

This SCADA (supervisory control and data acquisition) monitoring is slowly spreading to the upstream, but only as one-way signals into the home office, where the results are compared against "plan." What differs in the downstream LEM reinforcement learning loop is that measured results are compared with the outcomes predicted by a model of plant performance. Responses and changes to operational procedures are then broadcast back to the plant to optimize performance in a classic feedback loop.
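The difference between one-way reporting against plan and a closed loop can be sketched in a few lines. This is a minimal illustration of model-based feedback under stated assumptions, not a reinforcement learning implementation or any plant's control software: the "plant" is assumed to respond linearly to a single control setting, with a gain that the model initially gets wrong (both gains are hypothetical). After each cycle, the measured outcome is compared with the model's prediction, and the correction is fed back into the next setting.

```python
# Minimal closed-loop sketch: act, measure, compare with the model, feed back.
# The plant is assumed linear: output = true_gain * setting (hypothetical).

def run_feedback_loop(true_gain, model_gain, target, steps, learning_rate=0.5):
    """Return the final model gain and a per-cycle log of (setting, measured, score).

    target is the performance level agreed to by stakeholders; the score is
    the critic's distance between the measured output and that target.
    """
    log = []
    for _ in range(steps):
        setting = target / model_gain        # controller acts on the plant model
        predicted = model_gain * setting     # what the model says will happen
        measured = true_gain * setting       # what the sensors actually report
        surprise = measured - predicted      # reality vs. model prediction
        # Feed the discrepancy back: nudge the model gain toward the observed one.
        model_gain += learning_rate * surprise / setting
        log.append((setting, measured, abs(measured - target)))
    return model_gain, log


final_gain, log = run_feedback_loop(true_gain=2.0, model_gain=1.0, target=100.0, steps=10)
print(round(final_gain, 3))              # 1.999, approaching the true gain of 2.0
print([round(s, 1) for _, _, s in log])  # critic scores shrink cycle by cycle
```

In a one-way "compare against plan" setup, the surprise would be reported but the model and the next setting would never change; the loop above closes that gap.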

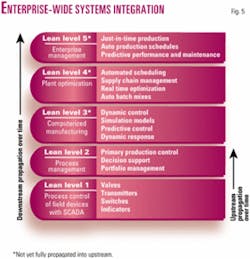

The downstream is currently migrating from "plant optimization" (lean level 4) to enterprise-wide management (Fig. 5). Petrochemical plants, in particular, have now advanced fully to this lean level 5. The upstream is only now migrating from process management (lean level 2) to computerized manufacturing (lean level 3).

In the subsurface, operators are just beginning to use 4D, or time-lapse, monitoring of reservoir drainage routinely as their SCADA, tracking variability in the subsurface and comparing it with reservoir simulations of what was predicted. This 4D management is the first upstream optimization process that could be called "reinforcement learning." Again, reinforcement learning uses software to compare expected model outcomes constantly with actual results, a key ingredient of LEM.

Reinforcement learning is best thought of as a form of robust adaptive control that takes actions in the face of uncertainties, from market volatility to reservoir uncertainty. Its results are policies (strategies) for actions given the uncertainties in the controlled process. It learns as it controls and adapts over time, even to changing uncertainties.
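A tabular Q-learning sketch shows what "learns as it controls" means in practice. Everything here is a hypothetical toy chosen for illustration, not a field model: the price switches between two assumed regimes, the operator can either produce at full rate (lifting more water, which costs money) or choke back, and the daily margins are made-up numbers. The agent is never told those margins; it estimates the value of each action from experience and ends up with a policy, that is, an action for each price regime.

```python
import random

PRICES = {"low": 5.0, "high": 25.0}   # hypothetical price regimes
ACTIONS = ("full", "choke")
PERSIST = 0.8                         # assumed chance the price regime persists


def reward(state, action):
    """Hypothetical daily margin: full rate lifts more water, which costs money."""
    price = PRICES[state]
    if action == "full":
        return 10.0 * price - 100.0
    return 4.0 * price - 10.0


def next_state(state, rng):
    """Price regime persists with probability PERSIST, otherwise flips."""
    if rng.random() < PERSIST:
        return state
    return "high" if state == "low" else "low"


def q_learning(steps=20000, alpha=0.05, gamma=0.5, epsilon=0.1, seed=0):
    """Epsilon-greedy tabular Q-learning; returns (greedy policy, Q table)."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in PRICES for a in ACTIONS}
    state = "low"
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)                         # explore
        else:
            action = max(ACTIONS, key=lambda a: q[(state, a)])   # exploit
        r = reward(state, action)
        nxt = next_state(state, rng)
        best_next = max(q[(nxt, a)] for a in ACTIONS)
        # Standard Q-learning update toward reward plus discounted best next value.
        q[(state, action)] += alpha * (r + gamma * best_next - q[(state, action)])
        state = nxt
    policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in PRICES}
    return policy, q


policy, q = q_learning()
print(policy)
```

Because the action does not influence future prices in this toy, the learned policy should coincide with the best one-step margin: choke back when prices are low, produce at full rate when they are high. The point is only that the policy is discovered by trial and feedback rather than specified in advance; a realistic version would need state variables, such as reservoir pressure, that the actions actually change.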

An example of LEM for an operational field would be "base load" production management, as discussed in part 4 of this series (OGJ, Aug. 25, 2003, p. 56). Many engineering decisions can be managed to control the shape of the production profile over time in a field, but incentives are not yet present to drive these decisions with a real options economic model.

Whereas the usual production practices are designed to maximize flow rates at all times, commodity prices may dictate changes in the mix of oil and gas (profitable) and water (both injected and produced water are expenses). In part 4, we pointed out how real options valuation and robust stochastic control can exploit this variability mathematically.
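The point about mix can be made concrete with simple arithmetic. The sketch below is a hypothetical illustration, not the part 4 model: it values each well's daily oil and gas at assumed prices, charges an assumed handling cost for both produced and injected water, ignores all other operating costs, and keeps flowing only the wells with a positive margin. Rerunning it at a different oil price changes which wells are worth producing.

```python
from dataclasses import dataclass


@dataclass
class Well:
    """Hypothetical daily volumes for one producing well."""
    name: str
    oil_bbl: float
    gas_mcf: float
    water_bbl: float            # produced water
    injected_bbl: float = 0.0   # injection water allocated to this well


def daily_margin(well, oil_price, gas_price, water_cost):
    """Oil and gas revenue minus water handling (all other costs ignored)."""
    revenue = well.oil_bbl * oil_price + well.gas_mcf * gas_price
    water_expense = (well.water_bbl + well.injected_bbl) * water_cost
    return revenue - water_expense


def wells_to_flow(wells, oil_price, gas_price, water_cost):
    """Names of wells whose daily margin is positive at these prices."""
    return [w.name for w in wells
            if daily_margin(w, oil_price, gas_price, water_cost) > 0]


wells = [
    Well("A", oil_bbl=1000, gas_mcf=500, water_bbl=200),
    Well("B", oil_bbl=100, gas_mcf=50, water_bbl=3000, injected_bbl=1000),
]
print(wells_to_flow(wells, oil_price=30, gas_price=5, water_cost=1.0))  # ['A']
print(wells_to_flow(wells, oil_price=40, gas_price=5, water_cost=1.0))  # ['A', 'B']
```

At $30/bbl the high-water-cut well B loses $750/day, while at $40/bbl it earns $250/day, so the "right" mix depends on price rather than on maximizing flow.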

Consider as an analogy the thermostat that controls temperature in an office or house. You usually set it based on the simplest of models: how comfortable you are. Several smart or adaptive house and building projects now operating around the world use robust adaptive control methods, including reinforcement learning, to manage costs and improve energy efficiency while keeping occupants comfortable. A computer constantly checks the temperature against metrics that include the cost of heating and cooling, whether anyone is in the room, whether it is night or day, and so on.
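The smart thermostat's metric can be written down as a cost function. The sketch below is a hypothetical illustration: the quadratic discomfort penalty, the energy cost proportional to the indoor-outdoor temperature difference, and the day and night prices are all assumptions. It simply picks the candidate setpoint with the lowest combined cost. It optimizes a fixed metric and does not learn, which is what distinguishes it from the reinforcement learning controllers mentioned above.

```python
def energy_cost(setpoint, outside_temp, price):
    """Assumed cost: proportional to the indoor-outdoor gap (degrees C)."""
    return abs(setpoint - outside_temp) * price


def discomfort(setpoint, preferred, occupied):
    """Assumed quadratic penalty, counted only when someone is in the room."""
    return (setpoint - preferred) ** 2 if occupied else 0.0


def choose_setpoint(outside_temp, preferred, occupied, is_night,
                    day_price=2.0, night_price=1.0, candidates=range(12, 27)):
    """Pick the candidate setpoint that minimizes energy cost plus discomfort."""
    price = night_price if is_night else day_price
    return min(candidates,
               key=lambda t: energy_cost(t, outside_temp, price)
               + discomfort(t, preferred, occupied))


print(choose_setpoint(outside_temp=5, preferred=21, occupied=True, is_night=False))   # 20
print(choose_setpoint(outside_temp=5, preferred=21, occupied=False, is_night=False))  # 12
```

An occupied room is held one degree below the preferred temperature because that degree is worth more in energy than in comfort under these weights; an empty room falls to the lowest allowed setpoint.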

Propelled by what is becoming known as "real-time business" or "wiring the enterprise," this same type of reinforcement learning can be elevated to enterprise-wide management for the whole corporation.

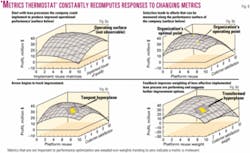

Take, for example, the "metrics thermostat" developed by Hauser at MIT's Sloan School of Management. The metrics thermostat (MT) is actually an economic model that begins with top management setting the metrics (Fig. 6a). (MT can be considered an online, wired, enterprise-wide approach to balanced scorecards.)

Software then continuously compares measured performance with those metrics (Fig. 6b). Suggested directions are sent out that manage profit (such as cost in our simple example) against what lean management calls "reuse matrices" and a "house of quality," another Hauser innovation (see the 3D surface in Fig. 6a). This surface is defined by the "data historian," which remembers what the room was like last week, last month, and last year, and by a matrix of all the parameters that affect quality of life in the room.
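The core arithmetic of a house of quality is a weighted sum: each customer need carries an importance weight, each cell of the relationship matrix records how strongly an engineering characteristic affects that need, and a characteristic's technical importance is the sum of weight times strength down its column. The room-comfort needs, weights, and characteristics below are hypothetical, and the 9/3/1 strength scale is the common quality function deployment (QFD) convention rather than anything specified in this article.

```python
# Hypothetical customer needs with importance weights (1-5 scale).
needs = {"warm enough": 5, "low energy bill": 3, "quiet": 2}

# Hypothetical relationship matrix on the conventional 9/3/1 scale
# (strong / medium / weak); missing cells mean no relationship.
relationships = {
    "warm enough":     {"heater capacity": 9, "insulation": 3},
    "low energy bill": {"insulation": 9, "heater efficiency": 9},
    "quiet":           {"fan speed": 9},
}


def technical_importance(needs, relationships):
    """Sum need weight x relationship strength for each characteristic, ranked."""
    scores = {}
    for need, weight in needs.items():
        for characteristic, strength in relationships.get(need, {}).items():
            scores[characteristic] = scores.get(characteristic, 0) + weight * strength
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


print(technical_importance(needs, relationships))
# {'heater capacity': 45, 'insulation': 42, 'heater efficiency': 27, 'fan speed': 18}
```

Insulation ranks nearly as high as heater capacity because it serves two needs at once, which is the kind of trade-off the matrix is meant to expose.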

What is new about reinforcement learning is that the software constantly improves on the performance recorded in the data historian by driving performance to "climb the hill" toward the peak of this 3D surface. Platform reuse considers what might be recycled, and customer satisfaction builds a database for each occupant of the room; for instance, grandma might like it warmer (Fig. 6c). Improvements in this multidimensional space ultimately result not only in better performance but also in changes to the metrics themselves (hence the reinforcement of metrics in Fig. 6d).

For the metrics thermostat, an actual model that can simulate the "operating surfaces" of a company is not needed. In the control loop, experiments (actions taken), along with changes in corporate metrics, are absorbed into the historian, and the MT's hyperplane approximation generates new response directions. The gradients (arrows along the surfaces in Fig. 6) tell management in which directions to take new actions.
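The idea of steering by a local hyperplane rather than a full company model can be sketched with ordinary least squares. This is a simplified illustration in the spirit of the metrics thermostat, not Hauser's published formulation: each historian row records how much emphasis two hypothetical metrics received in a period and the profit that followed, a plane is fitted through those points, and its slopes serve as the gradient indicating which emphasis to increase. The historian data below are invented and lie exactly on a plane so the result is easy to check.

```python
import numpy as np


def hyperplane_gradient(emphasis, outcomes):
    """Fit outcome ~ b0 + b1*m1 + b2*m2 + ... by least squares; return (b1, b2, ...)."""
    x = np.asarray(emphasis, dtype=float)
    y = np.asarray(outcomes, dtype=float)
    design = np.column_stack([np.ones(len(x)), x])  # intercept column + one per metric
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1:]


def next_emphasis(current, gradient, step=0.01):
    """Move metric emphasis uphill along the fitted plane, never below zero."""
    return np.clip(np.asarray(current, dtype=float) + step * gradient, 0.0, None)


# Hypothetical historian: (emphasis on metric 1, emphasis on metric 2) -> profit.
history = [(0.2, 0.1), (0.4, 0.1), (0.2, 0.3), (0.5, 0.4)]
profit = [19.0, 23.0, 29.0, 40.0]

gradient = hyperplane_gradient(history, profit)
print([round(float(g), 3) for g in gradient])                              # [20.0, 50.0]
print([round(float(w), 3) for w in next_emphasis([0.3, 0.3], gradient)])  # [0.5, 0.8]
```

No simulator of the company appears anywhere: the only "model" is the plane fitted to past experiments, refitted as new rows enter the historian.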

In artificial intelligence, for example in game-playing software for backgammon or chess, or in robotics such as programming a Martian rover, this is known as a solution to the "credit assignment" problem: working out which earlier actions deserve credit for an observed change in performance.
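Temporal-difference learning is one standard way reinforcement learning handles credit assignment, and a toy example shows the mechanism. The sketch below is illustrative only: a fixed four-step sequence of decisions pays off only at the last step, and TD(0) updates gradually pass credit for that payoff back to the earlier steps. The step size and discount factor are arbitrary choices.

```python
def td0_chain(n_steps=4, episodes=200, alpha=0.5, gamma=0.9):
    """TD(0) value estimates for a fixed chain whose only reward comes at the end.

    States 0..n_steps-1 are visited in order; the payoff of 1.0 arrives on the
    transition out of the last state. Index n_steps is the terminal state (value 0).
    """
    values = [0.0] * (n_steps + 1)
    for _ in range(episodes):
        for s in range(n_steps):
            reward = 1.0 if s == n_steps - 1 else 0.0
            target = reward + gamma * values[s + 1]    # bootstrap from the next state
            values[s] += alpha * (target - values[s])  # move toward the TD target
    return values[:n_steps]


print(td0_chain(episodes=1))               # [0.0, 0.0, 0.0, 0.5]
print([round(v, 3) for v in td0_chain()])  # [0.729, 0.81, 0.9, 1.0]
```

After one pass only the final step has any value; with repeated passes the earlier steps converge toward the discounted payoff, so a decision three steps before the reward ends up valued at 0.9 cubed, about 0.729.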

Similar reinforcement learning is directly transferable to better LEM of oil and gas fields once there is sufficient SCADA to provide real-time monitoring of what is actually occurring in both the subsurface and the marketplace.

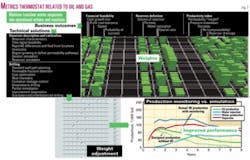

Oil field engineering decisions can be managed in different sequences and with varying importance depending upon which performance metrics are selected. Reinforcement learning can be used to set sliders that provide feedback loops from the engineers to the performance monitoring software (Fig. 7).

These sliders, in turn, feed a neural network (a stack of linked spreadsheets) that links problems encountered in day-to-day operations with prioritized corrective actions based on reinforcement learning.
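The slider idea can be illustrated with a plain weighted score. This is deliberately simpler than the neural network the text describes: the metrics, candidate actions, and 0-10 scores are hypothetical, and slider positions are simply normalized into weights that rank the corrective actions. Moving a slider reorders the priorities.

```python
def prioritize(actions, sliders):
    """Rank actions by the slider-weighted sum of their metric scores."""
    total = sum(sliders.values())
    weights = {metric: value / total for metric, value in sliders.items()}
    score = {
        name: sum(weights[m] * scores.get(m, 0.0) for m in weights)
        for name, scores in actions.items()
    }
    return sorted(score, key=score.get, reverse=True)


# Hypothetical corrective actions scored 0-10 against two metrics.
actions = {
    "acid stimulation": {"production": 8, "low cost": 3},
    "replace pump":     {"production": 6, "low cost": 6},
    "shut in well":     {"production": 0, "low cost": 10},
}
print(prioritize(actions, {"production": 8, "low cost": 2}))
# ['acid stimulation', 'replace pump', 'shut in well']
print(prioritize(actions, {"production": 2, "low cost": 8}))
# ['shut in well', 'replace pump', 'acid stimulation']
```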

This is how economic maximization using real options can be brought into the operations control loop. If the real options economic model calls for additional production, more expensive remediation may be justified than a cost-minimization model would select (Fig. 7). Base-load decisions for one field can be balanced against peak-load decisions in others.
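A short comparison shows how the choice of economic model changes the remediation decision. The sketch is a simplified stand-in, not a real options valuation: the remediation options, costs, rates, prices, and evaluation horizon are hypothetical, and decline and discounting are ignored. The cost-minimizing rule takes the cheapest fix that restores a required rate; the value-maximizing rule takes the fix with the largest net value under the given price scenario.

```python
def cheapest_fix(options, required_bpd):
    """Cost-minimization: cheapest option that restores the required rate.

    Raises ValueError if no option adds enough production.
    """
    feasible = [o for o in options if o["added_bpd"] >= required_bpd]
    return min(feasible, key=lambda o: o["cost"])["name"]


def best_value_fix(options, oil_price, days):
    """Value-maximization: largest revenue from added barrels minus cost."""
    return max(options,
               key=lambda o: o["added_bpd"] * oil_price * days - o["cost"])["name"]


options = [
    {"name": "coiled-tubing cleanout", "cost": 200_000, "added_bpd": 300},
    {"name": "sidetrack", "cost": 3_000_000, "added_bpd": 1_500},
]
print(cheapest_fix(options, required_bpd=250))          # coiled-tubing cleanout
print(best_value_fix(options, oil_price=30, days=180))  # sidetrack
print(best_value_fix(options, oil_price=10, days=180))  # coiled-tubing cleanout
```

At $30/bbl the sidetrack nets $5.1 million against $1.42 million for the cleanout over 180 days, so the costlier fix is justified; at $10/bbl the ranking reverses.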

Although these methods may seem overly complex, they are being used widely in other industries as real-time business becomes a reality. For example, "codebooks," akin to the matrices shown above, are used for real-time root cause analysis of network failures on the Internet (cf. http://www.smarts.com). The petroleum industry must adopt such enterprise-wide lean energy management practices if we are to remain profitable in the increasingly difficult and complex petroleum industry of the future.
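Codebook-style diagnosis can be sketched as nearest-match decoding: each known problem is stored as the pattern of symptoms it produces, and an observed pattern is attributed to the problem whose pattern differs from it in the fewest places (the Hamming distance). The problems, symptoms, and signatures below are hypothetical oil-field examples, and this is a generic illustration of the idea rather than the commercial system referenced above.

```python
# Hypothetical symptoms, in a fixed order, observed as present (1) or absent (0).
SYMPTOMS = ("low flow rate", "high pump current",
            "rising wellhead pressure", "flat-lined gauge")

# Hypothetical codebook: the symptom signature each problem produces.
CODEBOOK = {
    "pump failure":    (1, 1, 0, 0),
    "scale in tubing": (0, 1, 1, 0),
    "sensor fault":    (0, 0, 0, 1),
}


def hamming(a, b):
    """Number of positions where two symptom patterns disagree."""
    return sum(x != y for x, y in zip(a, b))


def diagnose(observed):
    """Return the problem whose signature is closest to the observed pattern."""
    return min(CODEBOOK, key=lambda problem: hamming(CODEBOOK[problem], observed))


print(diagnose((1, 1, 0, 0)))  # pump failure (exact match)
print(diagnose((0, 1, 1, 1)))  # scale in tubing (one spurious symptom tolerated)
```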

Reference

1. Esser, W., and Anderson, R., "Visualization of the advanced digital enterprise," OTC 13010, Offshore Technology Conference, 2001.

Bibliography

Anderson, R., Boulanger, A., Longbottom, J., and Oligney, R., "Lean energy management—1: Lean energy management required for economic ultradeepwater development," OGJ, Mar. 17, 2003.

Anderson, R., and Boulanger, A., "Lean energy management 2: Ultradeepwater development—designing uncertainty into the enterprise," OGJ, May 19, 2003.

Anderson, R., and Boulanger, A., "Lean energy management 3: How to realize LEM benefits in ultradeepwater oil and gas," OGJ, June 30, 2003.

Anderson, R., and Boulanger, A., "Lean energy management 4: Flexible manufacturing techniques make ultradeepwater attractive to independents," OGJ, Aug. 25, 2003.

Anderson, R., Boulanger, A., Longbottom, J., and Oligney, R., "Future natural gas supplies and the ultra deepwater Gulf of Mexico," Energy Pulse, March 2003, online at: http://www.energypulse.net/centers/article/article_display.cfm?a_id=232.

Hauser, J., and Clausing, D., "The House of Quality," Harvard Business Review, May-June 1988, pp. 63-73.

Hauser, J., and Katz, G., "Metrics: You are what you measure!" European Management Journal, Vol. 16, No. 5, 1998, pp. 516-528.

Hauser, J., "Metrics thermostat," Journal of Product Innovation Management, Vol. 18, Issue 3, May 2001, pp. 134-153, online at: http://www.sciencedirect.com/science/article/B6VD5-433PDB1-2/2/46a05a9b89a82581f446fc0cbcf7df3f.

The authors

Roger N. Anderson is Doherty Senior Scholar at Lamont-Doherty Earth Observatory, Columbia University, Palisades, NY. He is also director of the Energy and the Environmental Research Center (EERC). His interests include marine geology, 4D seismic, borehole geophysics, portfolio management, real options, and lean management.

Albert Boulanger is senior computational scientist at the EERC at Lamont-Doherty. He has extensive experience in complex systems integration and expertise in providing intelligent reasoning components that interact with humans in large-scale systems. He integrates numerical, intelligent reasoning, human interface, and visualization components into seamless human-oriented systems.